By Daniel Burstein, Director of Editorial Content

In the MarketingSherpa Ecommerce Benchmark Study survey, we asked:

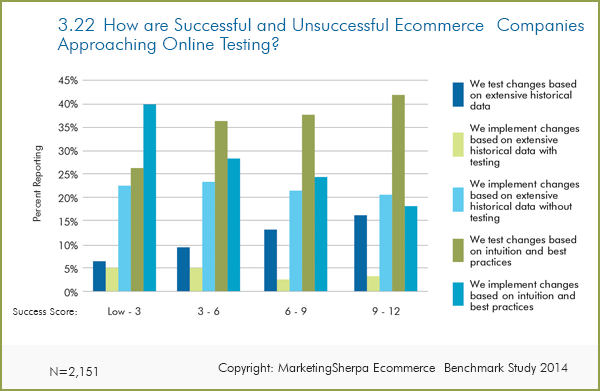

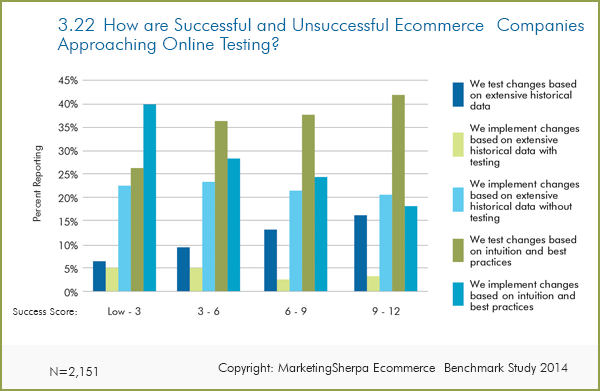

Q. What best describes your company's website testing and optimization strategy?

We then correlated this data against the respondents' median weighted success score* and created the chart below. In this week’s article, it is followed by analysis and responses from Benchmark Study survey participants:


Test your way to success

Even just glancing at the data in the above chart quickly reveals a clear trend line.

The share of companies that " ... implement changes based on intuition and best practices" drops as the success score increases. For example, these companies make up 40% of the companies with a success score below 3.

On the flip side, the share of companies that " ... test changes based on intuition and best practices" or " ... test changes based on extensive historical data" rises as the success score increases. For example, 42% of the companies with a success score between 9 and 12 (the highest score of any respondent was 14) indicated that they tested changes based on intuition and best practices.

How to increase conversion

Ecommerce companies that engage in behavioral testing are more successful because they are better able to learn what their customers want. They can then apply this knowledge to increase conversion instead of making changes based on guesses, gut feeling, intuition or best practices (in other words, what worked for someone else’s customers).

As one marketer replied in the Benchmark Study survey, "It's very important that before you implement any changes on to your website that there is some A/B testing done. Without data how are you going to know what works and what didn't work?"
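To make the respondent's point concrete, here is a minimal sketch of how the results of a simple A/B test might be checked before a change is rolled out. It assumes a two-proportion z-test, which is one common way to compare conversion rates and is not necessarily the method any survey respondent used. The function name and the visitor and conversion counts are hypothetical and purely illustrative.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates with a pooled two-proportion z-test.

    conv_a / n_a: conversions and visitors for the control page (A).
    conv_b / n_b: conversions and visitors for the treatment page (B).
    Returns (z, p_value), where p_value is two-sided.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that A and B convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Cannot test: no variation in the pooled conversion rate")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
    print(f"z = {z:.2f}, two-sided p-value = {p:.4f}")
```

A small p-value suggests the difference between the two pages is unlikely to be chance alone. It says nothing about why customers preferred one version, which is where follow-up tests come in.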

Testing and optimization challenges

That being said, testing isn’t without its challenges, which is probably why some of the respondents have not attempted it.

Those challenges range from the political …

"Most of our clients only want a website … they also don't understand the value and benefits of analytical tools on the back end to measure results and to modify marketing campaigns and testing for better conversion."

… to the technological. Some other top challenges marketers listed were:

- "Usability and the lack of A/B testing. Other technology issues."

- "Trying to conduct various A/B tests from a .NET platform."

Using A/B testing to get to the next level

This doesn’t mean you need to test everything. Testing, like any other tactic, is an investment, and you should only use it where you can get the best return.

You can test to discover why you have conversion leaks in your funnel, as this respondent did — "Conversions are down. Not sure if it’s tied directly to the economy or to the offer. Testing shows a little of both."

You can test to help you decide which channels are most worth investing in:

"By marketing in multiple areas (retargeting, display, PPC, organic, affiliates and email), it is tough to track your actual ROI on customers and find which channels attribute the most revenue. Keeping a simple model to A/B test is an important key to our marketing success but is a huge challenge in many organizations."

Once you’ve identified some obvious problems in your conversion funnel using analytics, you can test to discover whether, and why, the changes you think will matter actually have an impact, and then decide on the next steps for improvement. That's what this respondent did:

"Our conversion funnel from 'Cart start' to 'Purchase confirmation' was a disaster — too many steps, too much abandonment and not enough messaging within the funnel to help navigate the customer. We did some thorough analysis on our purchase funnel. We identified all of the major drop out points (abandonment) and identified steps that could either be eliminated, skipped or consolidated. Our purchase funnel went from 12 steps down to six. We still have some testing and optimizing to do."
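The kind of drop-out analysis this respondent describes can be sketched in a few lines. The example below assumes you can export a visitor count for each funnel step from your analytics tool. The function names, the intermediate step names and every count are hypothetical; only "Cart start" and "Purchase confirmation" come from the quote above.

```python
def step_abandonment(funnel):
    """Return (from_step, to_step, abandonment_rate) for each adjacent pair.

    funnel: ordered list of (step_name, visitor_count) tuples.
    """
    rates = []
    for (name_a, count_a), (name_b, count_b) in zip(funnel, funnel[1:]):
        if count_a <= 0:
            raise ValueError(f"Step '{name_a}' has no visitors")
        # Fraction of visitors who reached step A but not step B.
        rates.append((name_a, name_b, 1 - count_b / count_a))
    return rates


def worst_drop_off(funnel):
    """Return the transition with the highest abandonment rate."""
    return max(step_abandonment(funnel), key=lambda item: item[2])


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    funnel = [
        ("Cart start", 1000),
        ("Shipping details", 600),
        ("Payment details", 540),
        ("Purchase confirmation", 270),
    ]
    for start, end, rate in step_abandonment(funnel):
        print(f"{start} -> {end}: {rate:.0%} abandoned")
    print("Largest leak:", worst_drop_off(funnel)[:2])
```

The transitions with the highest abandonment are natural candidates for the first tests, such as eliminating, skipping or consolidating a step.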

Once you’ve discovered the power of using testing to better learn what your customers want, and the conversion gains that result, it is easy to get excited about the practice. On that note, I’ll leave you with the passionate words of one Benchmark Study survey respondent:

"Test, Test, Test! And then test again!"

*The weighted success score was created by Dr. Diana Sindicich, Senior Manager, Data Sciences, MECLABS Institute (parent company of MarketingSherpa). It includes factors such as financial metrics (e.g., year-over-year difference in annual and ecommerce revenue), and you can read more about these metrics on page nine of the Benchmark Study.

Related resources

MarketingSherpa Ecommerce Benchmark Study — Made possible by a research grant from Magento, an eBay company

Price Testing: Order of price increases revenue 51% per visitor for Portland Trail Blazers

Ecommerce Research Chart: The value of analytics and data

Marketing Strategy: What is your "Only Factor"?

Ecommerce Research Chart: How reputation affects success (and 5 ways to improve it)